Work & Projects

Goat Simulator 3

@ Coffee Stain North

Goat Simulator 3 is a brand new third-person sandbox adventure game in which you get to become the literal GOAT.

Just like the first Goat Simulator, you'll need to headbutt, lick and triple-jump your way across the giant island of San Angora - this time with all new areas, challenges, and events to discover.

My role & contributions

Technical Game Designer

I joined the team about six months before launch, jumping into the beautiful chaos that is Goat Simulator 3. My main mission? Hunt down bugs, fix broken stuff, and generally make sure the goats caused chaos on purpose, not by accident.

After release, I stuck around to keep things stable ("goat enough") and improve the experience through:

- Ongoing bug fixing and issue wrangling

- Improving physics assets for goats and NPCs (because if something flies across the map, it should at least look intentional)

- Delivering patches and free content updates

DLC: Multiverse of Nonsense

@ Coffee Stain North

In a world where everything went wrong (and it was all your fault) you must now help the guardian of the Multiverse to save it.

Embark on a new epic journey with grandiose quests, questionable characters, conveniently color-coded instability stones, and traverse between different universes while trying to clean up the mess you made before it’s too late.

My role & contributions

Intermediate Technical Game Designer

I was part of the full development cycle for Multiverse of Nonsense, from ideation through release and post-launch support.

Some of my responsibilities and contributions:

- Ideation and concept development

- Prototyping gears, events and interactables

- Designing and implementing events and interactables

DLC: Baadlands Furry Road

@ Coffee Stain North

Play as Baallistic Pilgor, the antihero and survivor of the apocalypse. Roam around the Baadlands on your motorcycle, butt heads with bizarre rival factions, establish your town and harass its citizens, and try to figure out what's behind the mysterious vault. All this while covered in sand.

My role & contributions

Senior Technical Game Designer

I was part of the full development cycle for Baadlands, from ideation through release and post-launch support.

Some of my responsibilities and contributions:

- Ideation and concept development

- Prototyping gears, events and interactables

- Designing and implementing events and interactables

Utility AI Extension

Personal project

An extension to Unreal Engine's Behavior Tree that adds Utility Selector and Action Nodes with considerations.

What it is

I wanted a more reactive and flexible AI, so I created a system where the AI selects an action (task) based on how useful it is at the current moment. Utility AI is well suited for this, as each action has considerations attached to it that return a score based on the current state of the world.
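The scoring idea can be sketched in plain C++, outside of Unreal. This is only an illustration under stated assumptions, not the project's actual code: `WorldState`, `Consideration`, `Action` and `SelectAction` are hypothetical names, and multiplying the consideration scores is just one common way to combine them.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical snapshot of the world that considerations read from.
struct WorldState {
    float distanceToTarget;  // in meters
    float health;            // normalized to 0..1
    float ammo;              // normalized to 0..1
};

// A consideration maps the current world state to a score.
using Consideration = std::function<float(const WorldState&)>;

struct Action {
    std::string name;
    std::vector<Consideration> considerations;

    // Assumption: scores are clamped to [0, 1] and multiplied,
    // so a single zero consideration vetoes the whole action.
    float Score(const WorldState& world) const {
        float score = 1.0f;
        for (const Consideration& consideration : considerations) {
            const float raw = consideration(world);
            score *= std::max(0.0f, std::min(1.0f, raw));
        }
        return score;
    }
};

// Returns the highest scoring action; on a tie the earlier action wins.
const Action& SelectAction(const std::vector<Action>& actions,
                           const WorldState& world) {
    assert(!actions.empty());
    const Action* best = &actions.front();
    float bestScore = best->Score(world);
    for (const Action& action : actions) {
        const float score = action.Score(world);
        if (score > bestScore) {
            best = &action;
            bestScore = score;
        }
    }
    return *best;
}
```

With a "Flee" action scored by missing health and a "FireWeapon" action scored by ammo and range, the selected action flips as the agent's health drops, without any hand-written transition between the two.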

By extending the Behavior Tree, we can still use the strengths of behavior trees in situations that need more controlled behavior. In my system, each action takes advantage of the powerful new StateTree asset and has all of its behavior contained in that StateTree.

All navigation for the agent is handled in its own separate StateTree and lives outside the Behavior Tree. This allows actions to be layered on top of movement if needed. An example would be a "Fire weapon" action that lets the agent keep moving towards the target set by an earlier action. An action can send a "stop" event to the movement StateTree to make the agent pause movement, or it can set its own movement location.
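A rough sketch of that layering, in plain C++ rather than actual StateTree code: `MovementLayer`, `MoveEvent` and `FireWeaponAction` are hypothetical stand-ins, and the straight-line stepping is a placeholder assumption for real navigation.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x; float y; };

// Hypothetical events an action can send to the movement layer.
enum class MoveEventType { Stop, MoveTo };
struct MoveEvent { MoveEventType type; Vec2 target; };

// Stand-in for the separate movement state tree: it alone owns movement state.
class MovementLayer {
public:
    void Send(const MoveEvent& event) {
        if (event.type == MoveEventType::Stop) {
            moving_ = false;
        } else {
            target_ = event.target;
            moving_ = true;
        }
    }

    // Moves each axis toward the target by at most `step` units.
    void Tick(float step) {
        assert(step > 0.0f);
        if (!moving_) return;
        position_.x = Approach(position_.x, target_.x, step);
        position_.y = Approach(position_.y, target_.y, step);
        if (position_.x == target_.x && position_.y == target_.y) moving_ = false;
    }

    bool IsMoving() const { return moving_; }
    Vec2 Position() const { return position_; }

private:
    static float Approach(float current, float target, float step) {
        const float delta = target - current;
        if (std::fabs(delta) <= step) return target;  // snap when close enough
        return current + (delta > 0.0f ? step : -step);
    }

    Vec2 position_{0.0f, 0.0f};
    Vec2 target_{0.0f, 0.0f};
    bool moving_ = false;
};

// An action layered on top of movement: it never touches movement state.
struct FireWeaponAction {
    int shotsFired = 0;
    void Tick() { ++shotsFired; }
};
```

Because the action and the movement layer tick independently, an earlier action can send a `MoveTo` event and a later "Fire weapon" action can run while the agent keeps walking. Only an explicit `Stop` event pauses it.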

Since all actions are state trees, they can gracefully handle interruption in their exit states. When the utility system finds a higher-scoring action, it can tell the currently running action's StateTree to abort. Once the state tree has handled its exit states, the utility selector chooses the new action.
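The abort handshake can be sketched as a small state machine. Again, this is a simplified illustration and not the extension's real API: `ActionInstance`, `UtilitySelector` and the tick-counted exit are assumptions made for the example, and scores are set by hand instead of coming from considerations.

```cpp
#include <cassert>
#include <vector>

enum class RunState { Idle, Running, Exiting };

// Hypothetical stand-in for an action whose behavior lives in a state tree.
struct ActionInstance {
    float score = 0.0f;       // utility score, refreshed by the caller
    int exitTicksNeeded = 1;  // how many ticks the exit states take
    int exitTicksLeft = 0;
    RunState state = RunState::Idle;

    void Start() { state = RunState::Running; }

    // Ask the action to wind down instead of cutting it off.
    void RequestAbort() {
        if (state == RunState::Running) {
            state = RunState::Exiting;
            exitTicksLeft = exitTicksNeeded;
        }
    }

    void Tick() {
        if (state == RunState::Exiting && --exitTicksLeft <= 0) {
            state = RunState::Idle;  // exit states are done
        }
    }
};

class UtilitySelector {
public:
    explicit UtilitySelector(std::vector<ActionInstance>& actions)
        : actions_(actions) {
        assert(!actions_.empty());
    }

    void Tick() {
        const int best = BestIndex();
        if (current_ < 0) { StartAction(best); return; }

        ActionInstance& running = actions_[current_];
        if (running.state == RunState::Running && best != current_) {
            running.RequestAbort();  // a higher scoring action appeared
        }
        running.Tick();
        // Switch only after the running action has finished exiting.
        if (running.state == RunState::Idle) StartAction(best);
    }

    int Current() const { return current_; }

private:
    int BestIndex() const {
        int best = 0;
        for (int i = 1; i < static_cast<int>(actions_.size()); ++i) {
            if (actions_[i].score > actions_[best].score) best = i;
        }
        return best;
    }

    void StartAction(int index) {
        current_ = index;
        actions_[index].Start();
    }

    std::vector<ActionInstance>& actions_;
    int current_ = -1;  // -1 means nothing is running yet
};
```

If the running action needs two ticks to exit, the selector keeps it as the current action for one more tick after requesting the abort and starts the higher-scoring action only once the exit has completed.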

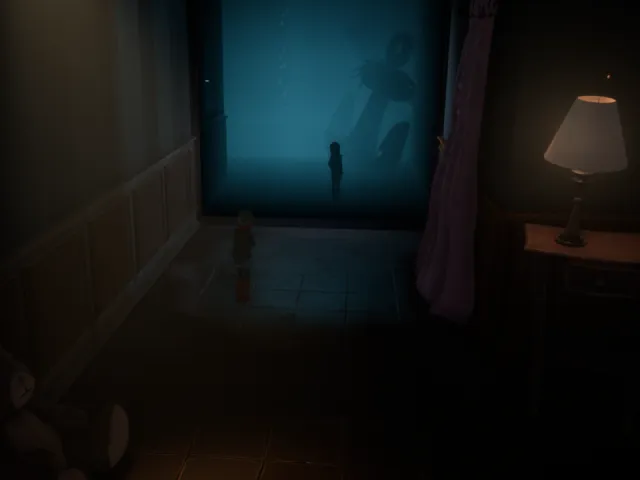

Echo

Futuregames project

Echo is a story-driven adventure side-scroller created by ten developers over four weeks. Inspired by games such as Inside and Little Nightmares, it lets you experience the story through its mechanics and environment as you help Mira figure out her past.

My role & contributions

The game was based on a loose concept I had of a mind in a coma, exploring themes of memory and identity. This idea was then further developed and refined in collaboration with the team.

I was mainly responsible for the character movement and interactions. The movement is a custom-made, physics-based system that allows for more realistic and predictable interactions with the environment than the default Unreal character does.

The goal for interacting with objects was fairly realistic movement that looks and feels like you, as a player, are interacting with physical objects.

The game is available to play for free on itch.io, so go check it out if you want to experience it yourself!

Nimble Hands

Personal project

Nimble Hands is a game where you, as a thief, have to navigate tight spaces with your ever-growing, physics-based sack of loot.

What it is

This was part of my submission for Futuregames.

It includes a ten-page Game Design Document that describes story, gameplay, unique mechanics, player character, game world, game experience, game mechanics, enemies and challenges, level design, art style, music, sound, target audience and monetization.

I also created the prototypes in the video to showcase the fun factor of a physics-based sack. The first prototype was made in Unreal Engine and the more polished version was made in Unity.